Microsoft AI Without the Security Headaches

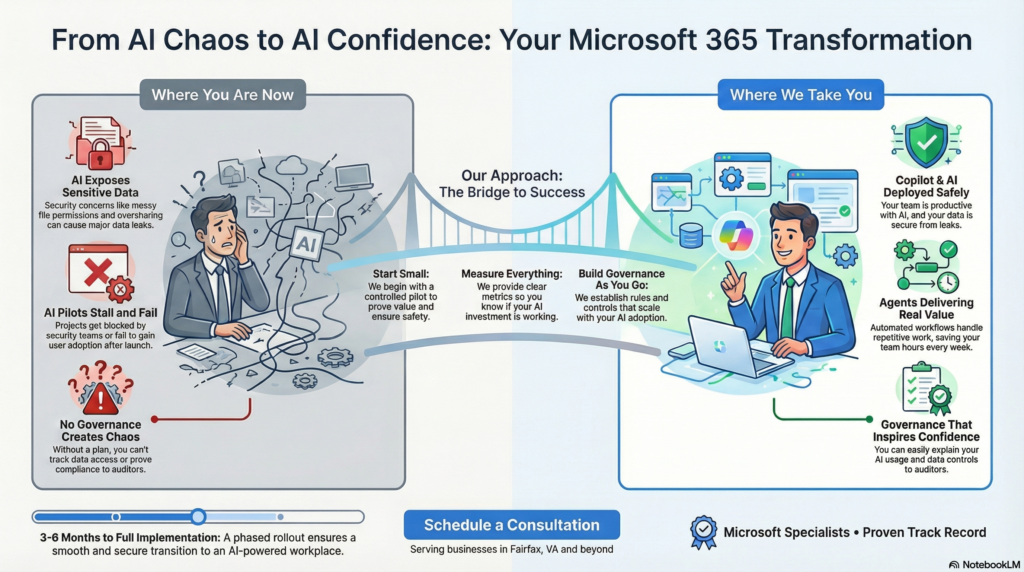

Your team wants Copilot and modern AI tools. Your security team wants your data locked down. We help you roll out Copilot, Azure AI, and AI agents safely, with clear guardrails.

For small and mid-sized businesses (50–500 employees) using Microsoft 365

🧩 What We Do

We help you deploy Microsoft AI tools the right way—so your team gets more done without creating security risks.

Three things we fix:

- Security concerns – Copilot surfacing sensitive data to the wrong people

- Permission problems – Files shared with too many people, broken access controls

- Stalled pilots – AI projects that never move past testing

What you get:

- ✅ Safe Microsoft Copilot rollouts with proper controls

- ✅ Custom AI agents that handle repetitive work

- ✅ Azure AI solutions that work with your actual business data

- ✅ Compliance and governance built in from the start

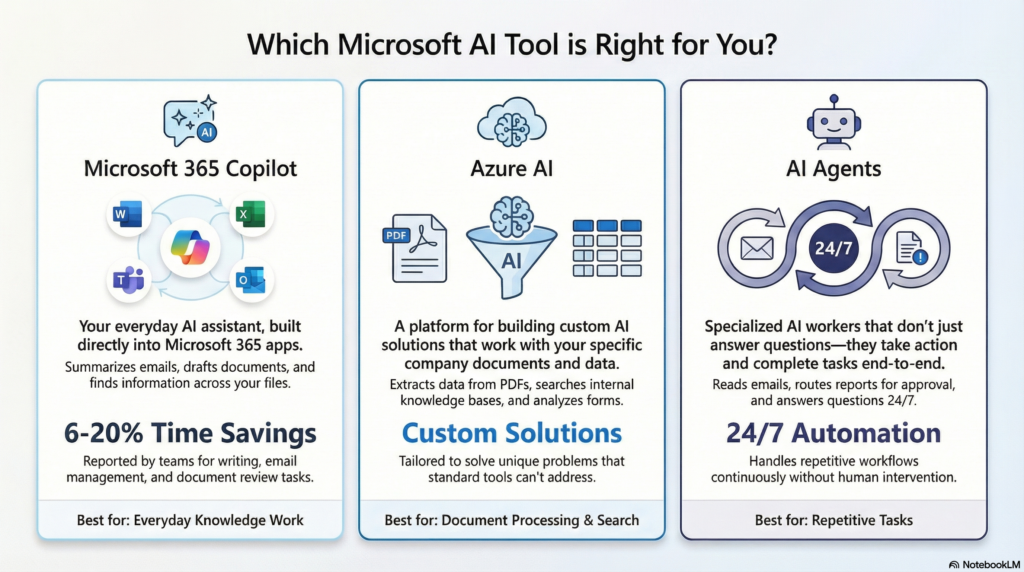

🧩 Understanding Microsoft AI Tools

Microsoft 365 Copilot

What it is: An AI assistant built into Word, Excel, Teams, Outlook, and SharePoint.

What it does:

- Summarizes long email threads

- Drafts documents and reports

- Analyzes spreadsheets

- Finds information across your files and conversations

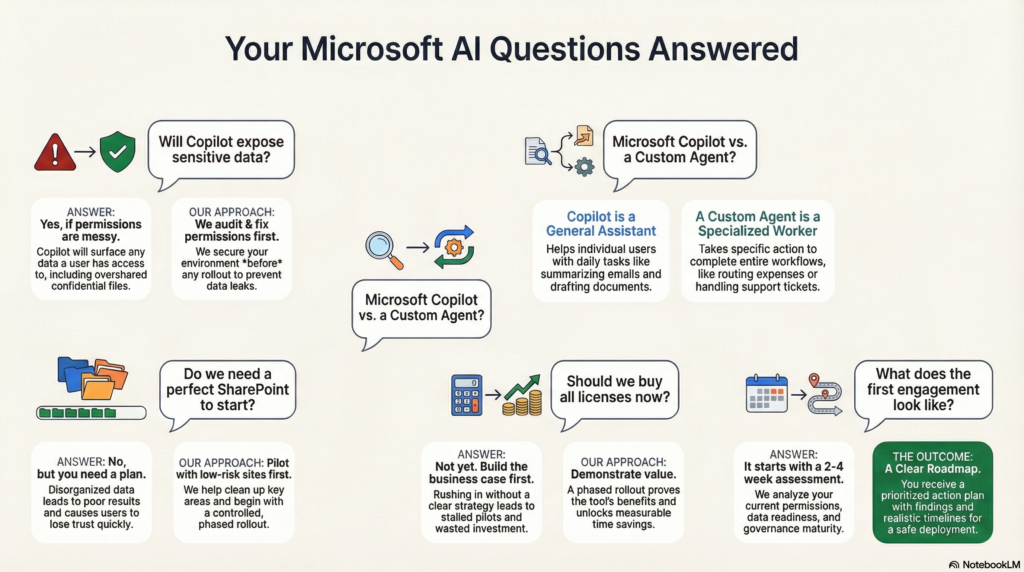

The catch: Copilot only sees what each user already has permission to see. If someone has access to a confidential folder by mistake, Copilot will show them that data when they ask.

Real example: A sales rep asks Copilot to “summarize all our pricing strategies.” If they have access to executive-only folders (maybe because someone shared a link once), Copilot will pull from those files too.
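A toy model makes the catch concrete. The sketch below assumes a simple in-memory index where each file lists the users or groups allowed to read it. `Doc`, `search_for_user`, and the sample files are hypothetical, and this is not how Copilot works internally. It only illustrates the rule described above: results are filtered by what the asking user can already open, so one stray sharing link changes what they see.

```python
from dataclasses import dataclass, field


@dataclass
class Doc:
    title: str
    text: str
    # Users or groups allowed to read this file (hypothetical permission model).
    readers: set[str] = field(default_factory=set)


def search_for_user(docs: list[Doc], query: str, principals: set[str]) -> list[str]:
    """Return titles of files that match the query AND that the user can already read."""
    terms = query.lower().split()
    hits = []
    for doc in docs:
        # Permission trimming: skip anything the user has no access to.
        if not (doc.readers & principals):
            continue
        if any(term in doc.text.lower() for term in terms):
            hits.append(doc.title)
    return hits


docs = [
    Doc("Sales playbook", "standard pricing and discount rules", {"sales", "executives"}),
    Doc("Exec pricing strategy", "confidential pricing strategy", {"executives"}),
]

# A sales rep in the "sales" group only sees the playbook...
print(search_for_user(docs, "pricing", {"alice", "sales"}))  # ['Sales playbook']

# ...until someone shares the executive file with her by link.
docs[1].readers.add("alice")
print(search_for_user(docs, "pricing", {"alice", "sales"}))  # ['Sales playbook', 'Exec pricing strategy']
```

Nothing about the search changed between the two calls; only the permissions did. That is why the fixes below focus on access, not on the AI tool.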

The payoff when done right: Organizations report 6–20% time savings on writing, email management, and document reviews.

Azure AI (For Custom Solutions)

When you need it: Not every problem fits Copilot. Sometimes you need AI that:

- Searches your specific company documents

- Extracts data from PDFs automatically

- Analyzes images or forms

- Builds custom workflows

Common uses:

- Search your knowledge base – Let employees ask questions and get answers from your internal documentation

- Extract invoice data – Automatically pull line items, totals, and vendor details from invoices

- Process contracts – Find key terms, dates, and obligations across hundreds of agreements

Real example: A construction company uses Azure Document Intelligence to read safety inspection forms, extract findings, and automatically route them to the right project managers.

AI Agents (Getting the Work Done)

What they are: Specialized AI workers that complete tasks from start to finish. They don’t just answer questions—they take action.

What they do:

- Read incoming customer emails and send appropriate responses

- Route expense reports for approval

- Extract data from forms and file it in your system

- Answer employee questions 24/7

Two ways to build them:

- Agent Builder (no coding required) – Works with Microsoft 365 data, built right into Copilot

- Copilot Studio (low-code) – Connects to external systems, databases, and APIs for more complex workflows

Real example: A property management company built an agent that reads tenant maintenance requests, classifies them by urgency, checks if similar issues were reported recently, and either answers immediately or creates a work order.
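The property management agent's flow (classify, check for recent repeats, then answer or open a work order) can be sketched as plain rules. This sketch assumes keyword matching and an in-memory request history; a real agent would more likely use a language model for classification and a ticketing system for history. The topic lists, the seven-day window, and the FAQ answer are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical topic keywords; a production agent would likely use a language model here.
TOPICS = {
    "plumbing": ("leak", "drip", "clog", "toilet"),
    "heating": ("heat", "furnace", "radiator"),
    "trash": ("trash", "garbage", "recycling"),
}
URGENT_TOPICS = {"plumbing", "heating"}
# Questions the agent can answer immediately, with no work order (hypothetical answer).
FAQ_ANSWERS = {"trash": "Trash and recycling are collected every Tuesday."}


@dataclass
class Request:
    unit: str
    text: str
    received: datetime


def topic_of(text: str) -> str:
    """Return the first topic whose keywords appear in the text, else 'other'."""
    lowered = text.lower()
    for topic, keywords in TOPICS.items():
        if any(keyword in lowered for keyword in keywords):
            return topic
    return "other"


def handle(req: Request, history: list[Request], window_days: int = 7) -> str:
    topic = topic_of(req.text)
    # Answer routine questions right away.
    if topic in FAQ_ANSWERS:
        return f"answer: {FAQ_ANSWERS[topic]}"
    urgency = "urgent" if topic in URGENT_TOPICS else "normal"
    # Flag repeats: same unit, same topic, reported within the window.
    cutoff = req.received - timedelta(days=window_days)
    repeat = any(
        old.unit == req.unit and old.received >= cutoff and topic_of(old.text) == topic
        for old in history
    )
    suffix = " (repeat issue)" if repeat else ""
    return f"work order [{urgency}] {topic}{suffix}"


now = datetime(2024, 3, 10, 9, 0)
history = [Request("4B", "Kitchen sink has a slow drip", now - timedelta(days=3))]
print(handle(Request("4B", "The sink is leaking again", now), history))
# work order [urgent] plumbing (repeat issue)
print(handle(Request("2A", "When is garbage pickup?", now), history))
# answer: Trash and recycling are collected every Tuesday.
```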

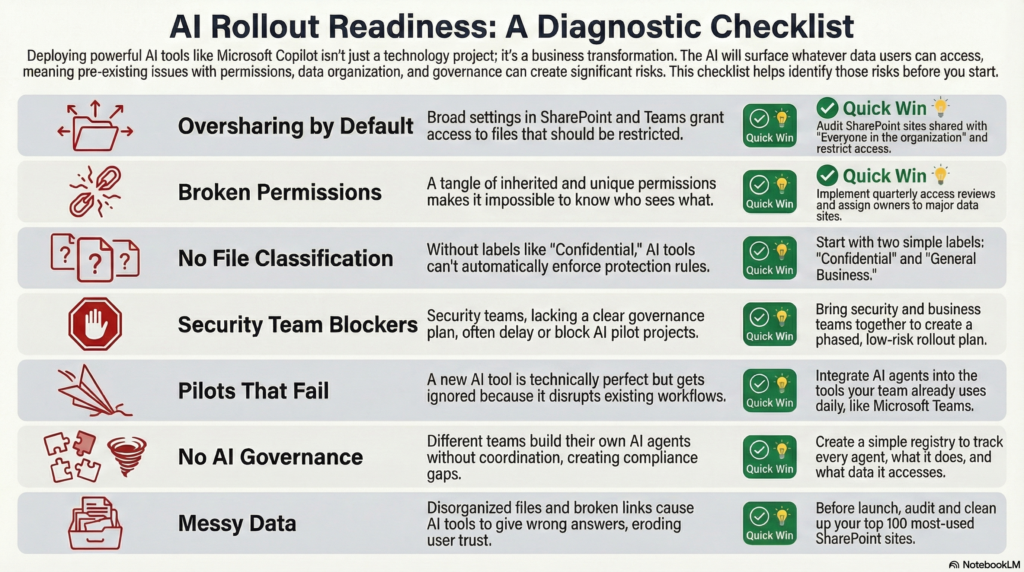

🧭 Common Problems We Fix

🌍 Problem 1: Oversharing Through Default Settings

What happens: SharePoint sites set to “Everyone in the organization.” Teams channels shared too broadly. Old permission settings no one remembers changing.

Why it matters: Copilot will find and surface this data to anyone who asks. An employee searching for “salary” might see executive compensation files they shouldn’t have access to.

Quick win: Run a SharePoint audit to find sites shared with your entire company. Start with the 20 largest or most-used of those sites and decide whether everyone really needs access.
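Once you have a sharing report exported to CSV, shortlisting the sites takes only a few lines of scripting. This sketch assumes hypothetical column names (`SiteUrl`, `SharedWith`, `ItemCount`) and sample rows on Microsoft's placeholder `contoso` domain; rename the columns to match your actual export. Ranking by item count is just one reasonable way to decide where to start.

```python
import csv
import io

# Hypothetical export format; adjust the column names to match your real report.
SAMPLE_EXPORT = """SiteUrl,SharedWith,ItemCount
https://contoso.sharepoint.com/sites/HR,Everyone except external users,5400
https://contoso.sharepoint.com/sites/Marketing,Marketing Members,1200
https://contoso.sharepoint.com/sites/Finance,Everyone,3100
https://contoso.sharepoint.com/sites/Lunch,Everyone except external users,40
"""

# Group names that mean "the whole company".
BROAD_GROUPS = {"everyone", "everyone except external users"}


def broadly_shared_sites(rows, top_n=20):
    """Return up to top_n (url, item_count) pairs for company-wide sites, largest first."""
    broad = [r for r in rows if r["SharedWith"].strip().lower() in BROAD_GROUPS]
    broad.sort(key=lambda r: int(r["ItemCount"]), reverse=True)
    return [(r["SiteUrl"], int(r["ItemCount"])) for r in broad[:top_n]]


rows = list(csv.DictReader(io.StringIO(SAMPLE_EXPORT)))
for url, items in broadly_shared_sites(rows):
    print(f"{items:>6}  {url}")
```

With the sample data, HR, Finance, and Lunch are listed (in that order) and Marketing is skipped because it is limited to its own members.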

🛠️ Problem 2: Broken Permission Inheritance

What happens: Sites inherit from company-wide groups. Then someone overrides folder permissions. Then individual files get unique permissions. Now no one knows who really sees what.

Common mistake: IT fixes permissions once but doesn’t monitor them. Within months, permissions drift back to chaos.

What to do next: Run access reviews every quarter, and assign each major SharePoint site an owner who is responsible for keeping its permissions clean.

🚀 Problem 3: No File Classification System

What happens: Files aren’t labeled as Public, Internal, Confidential, or Restricted. Without these labels, Copilot and agents can’t enforce protection rules automatically.

Real example: A healthcare clinic had patient records mixed in with general admin files. No labels meant no automatic protection. When they deployed Copilot, any staff member could potentially search and find patient data.

Quick win: Start with just two labels: “Confidential” and “General Business.” Apply Confidential to files with customer data, financial records, or HR information. You can add more labels later.
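A first pass at finding Confidential candidates can be a simple pattern scan. The rules below are hypothetical examples for the three categories named above, not Microsoft Purview's built-in classifiers; in production you would typically rely on Purview's sensitive information types and auto-labeling policies instead. The sketch only suggests a label for a person to confirm.

```python
import re

# Hypothetical rules: phrases hinting at customer, financial, or HR content.
CONFIDENTIAL_PATTERNS = {
    "customer data": re.compile(r"\b(customer|client) (list|account|contact)s?\b", re.I),
    "financial records": re.compile(r"\b(invoice|bank account|payroll|balance sheet)\b", re.I),
    "HR information": re.compile(r"\b(salary|performance review|disciplinary|benefits enrollment)\b", re.I),
}


def suggest_label(text: str) -> tuple[str, list[str]]:
    """Suggest 'Confidential' with the matching reasons, or 'General Business' if nothing matches."""
    reasons = [name for name, pattern in CONFIDENTIAL_PATTERNS.items() if pattern.search(text)]
    return ("Confidential", reasons) if reasons else ("General Business", [])


print(suggest_label("Q3 payroll summary and salary bands"))
# ('Confidential', ['financial records', 'HR information'])
print(suggest_label("Office holiday party sign-up sheet"))
# ('General Business', [])
```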

📈 Problem 4: Security Team Says “Not Yet”

What happens: Business teams are excited about Copilot. Security teams see risk. Pilots get blocked or delayed for months.

Why it happens: Security doesn’t have a clear plan for controlling data access, monitoring usage, or proving compliance.

What to do next: Bring security and business teams together early. Show them a phased approach: pilot with low-risk content first, measure results, add controls, then expand.

📈 Problem 5: Pilots That Don’t Stick

What happens: The AI agent works perfectly in testing. But when you roll it out, nobody uses it.

Common reasons:

- It disrupts how people actually work

- Users weren’t trained properly

- The workflow is technically correct but impractical

Real example: A company built an agent to answer IT support questions. But employees were used to calling the help desk directly. The agent sat unused until they integrated it into Teams where employees already worked.

📈 Problem 6: No AI Governance

What happens: Different teams build agents without coordination. Some connect to sensitive systems. No one reviews them. No audit trail exists.

Why it matters: When compliance auditors ask “what AI tools are accessing customer data?” you can’t answer.

Quick win: Create a simple agent registry—a spreadsheet listing every agent, what it does, what data it accesses, and who owns it. Update it monthly.
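If the spreadsheet grows, or you want the monthly update checked automatically, the same registry can be kept as structured records. This sketch uses hypothetical field names mirroring the columns above, plus a last-reviewed date; the 31-day review window and the sample agents are assumptions.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class AgentRecord:
    name: str
    purpose: str             # what the agent does
    data_sources: list[str]  # what data it accesses
    owner: str               # who is accountable for it ("" if nobody)
    last_reviewed: date


def agents_touching(registry: list[AgentRecord], source: str) -> list[str]:
    """Answer the auditor's question: which agents access this data?"""
    return [agent.name for agent in registry if source in agent.data_sources]


def needs_attention(registry: list[AgentRecord], today: date, max_age_days: int = 31) -> list[tuple[str, str]]:
    """Flag agents with no owner, or whose entry hasn't been reviewed recently."""
    flagged = []
    for agent in registry:
        if not agent.owner:
            flagged.append((agent.name, "no owner"))
        elif (today - agent.last_reviewed).days > max_age_days:
            flagged.append((agent.name, "review overdue"))
    return flagged


registry = [
    AgentRecord("HR helper", "Answers PTO and benefits questions", ["HR policies"], "jane.doe", date(2024, 5, 1)),
    AgentRecord("Invoice extractor", "Pulls totals from vendor invoices", ["finance", "customer data"], "", date(2024, 5, 20)),
    AgentRecord("Support triage", "Classifies incoming tickets", ["customer data"], "ops-team", date(2024, 3, 1)),
]
today = date(2024, 6, 1)
print(agents_touching(registry, "customer data"))  # ['Invoice extractor', 'Support triage']
print(needs_attention(registry, today))  # [('Invoice extractor', 'no owner'), ('Support triage', 'review overdue')]
```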

📈 Problem 7: Messy Data Kills Adoption

What happens: You launch Copilot, but your SharePoint is so disorganized that Copilot gives wrong answers or can’t find important documents. Users lose trust fast.

Common mistake: Treating AI deployment as an IT project instead of a data cleanup project.

What to do next: Before launching Copilot, audit your top 100 most-accessed SharePoint sites. Fix obvious issues—broken links, outdated files, confusing folder structures.

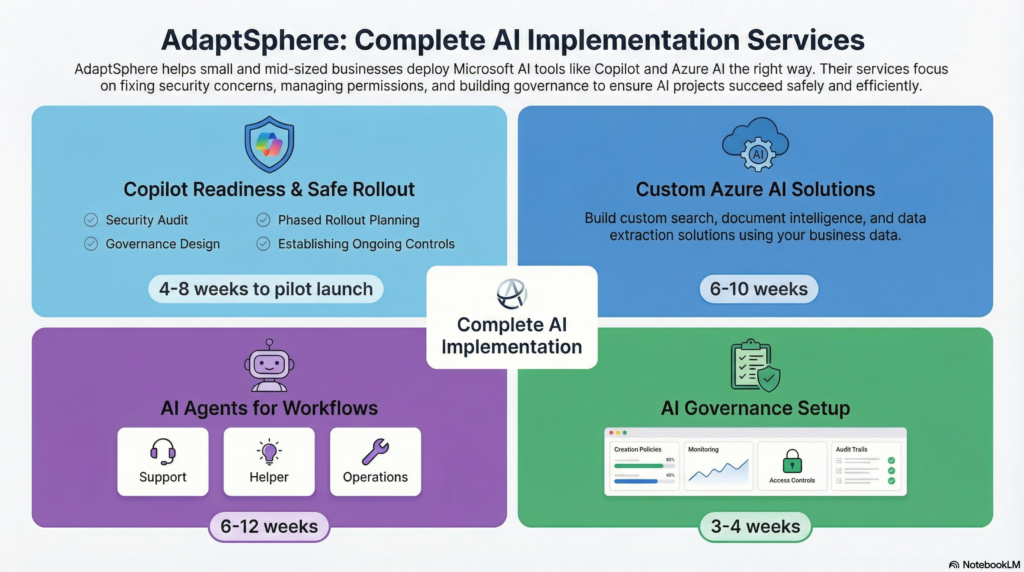

🧩 Our Services

Copilot Readiness and Safe Rollout

Who it’s for: Organizations ready to deploy Microsoft 365 Copilot but need to fix permissions and governance first.

What We Do

- Audit your environment – We check SharePoint, Teams, and OneDrive for oversharing and permission problems

- Design governance – Set up sensitivity labels, data loss prevention rules, and access reviews

- Plan your rollout – Start with low-risk content and power users, then expand gradually

- Establish ongoing controls – Make sure governance continues after launch

What You Get

- Oversharing report with specific fixes needed

- Sensitivity label plan ready to deploy

- Phased rollout schedule (which teams, which content, what timeline)

- Monitoring tools to catch new permission problems

Timeline: 4–8 weeks from kickoff to pilot launch. 2–3 months for company-wide rollout.

Real example: A legal services firm with 150 employees wanted Copilot but had 200+ SharePoint sites with unclear permissions. We identified 40 sites with client data that were overshared, implemented sensitivity labels for client files, and piloted Copilot with their administrative staff first. After proving it was safe, they rolled out to attorneys within 3 months.

Azure AI Solution Build

Who it’s for: Organizations with needs Copilot doesn’t cover, such as searching specific company documents, extracting data from PDFs and forms, or building custom workflows.

Common Solutions We Build

Retrieval-Augmented Generation (RAG)

- AI that searches your actual company documents and cites sources

- Employees ask questions in natural language and get answers grounded in those documents

- Example: “What’s our policy on remote work equipment reimbursement?”

Azure AI Search

- Combines keyword and semantic search across your knowledge base

- Understands intent, not just exact word matches

- Example: Searching “laptop broke” also finds documents about “computer repair” and “hardware replacement”
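Hybrid search needs a way to merge a keyword ranking with a semantic (vector) ranking. Azure AI Search's hybrid queries use Reciprocal Rank Fusion (RRF) for this. The sketch below shows the RRF idea on two hypothetical result lists for the “laptop broke” query, using the commonly cited constant k = 60. It illustrates the technique only and is not the service's code.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one ranking.

    Each document scores sum(1 / (k + rank)) over every list it appears in,
    with rank starting at 1, so documents found by both searches rise to the top.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical results for the query "laptop broke".
keyword_hits = ["laptop-loaner-policy", "laptop-setup-guide"]
semantic_hits = ["hardware-replacement", "computer-repair-process", "laptop-loaner-policy"]

print(reciprocal_rank_fusion([keyword_hits, semantic_hits]))
```

The loaner policy comes first because both searches found it. The semantic-only results still make the list, which is how “computer repair” documents surface for a “laptop broke” query.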

Document Intelligence

- Automatically extracts data from invoices, contracts, forms, and PDFs

- Example: Process 500 vendor invoices, extract amounts and dates, route for approval

What You Get

- Architecture design and vendor recommendations

- Document processing pipeline (how files get indexed)

- Search tuned to your industry terminology

- Documentation so your team can maintain it

Timeline: 6–10 weeks depending on data volume. Quick win delivered in weeks 2–3. Production-ready by week 10.

Real example: A medical device distributor needed to search across 10 years of product documentation, safety data sheets, and compliance reports. We built a RAG system that lets their sales team ask questions like “Which products are approved for use in surgical centers?” and get instant answers with source citations.

AI Agents for Workflows

Who it’s for: Teams with repetitive work—customer support, HR questions, finance approvals, IT help desk, document routing.

Agent Types We Build

Support Agents

- Classify incoming tickets by type and urgency

- Retrieve answers from your FAQ and knowledge base

- Escalate complex issues to humans

- Example: A retail company’s agent handles 60% of customer service questions about order status, returns, and store hours

Internal Helper Agents

- Answer employee questions about HR policies, IT procedures, or finance rules

- Available 24/7 in Teams or email

- Example: An agent that answers questions about PTO policies, benefits enrollment, and expense report procedures

Operations Agents

- Triage documents and route them to the right people

- Process approvals and follow up on delays

- Extract structured data from unstructured documents

- Example: An agent that reads supplier contracts, extracts payment terms and renewal dates, and adds them to a tracking database

What You Get

- Working agent deployed in your environment

- Documentation of how it makes decisions

- Training for the team that will monitor and update it

- Clear handoff—who owns this agent going forward

Timeline: 6–12 weeks depending on complexity. Simple agents (FAQ, ticket classification) ready in 6–8 weeks.

Real example: A nonprofit with 80 staff members spent 10 hours per week answering repetitive HR and IT questions over email. We built a Teams agent that employees can ask questions like “How do I request vacation time?” or “My laptop won’t connect to Wi-Fi.” The agent handles 70% of questions, saving the HR and IT teams hours every week.

AI Governance Setup

Who it’s for: Organizations deploying Copilot or agents who need governance that’s real but not overwhelming.

What We Set Up

- Agent creation policy – Who can build agents, approval process, business justification required

- Inventory and monitoring – Track what agents exist and what they do

- Access control – Define which agents can connect to which systems

- Audit trail – Compliance documentation for regulators and auditors

What You Get

- AI governance policy document (creation, approval, data access, retirement rules)

- Governance dashboard showing all agents, usage, and compliance status

- Access control policies scoped to AI apps

- Quarterly review process and escalation paths

Timeline: 3–4 weeks to define and deploy initial governance. Then 30 minutes per new agent to classify and add controls.

Real example: A financial services company needed to deploy Copilot while proving to regulators they were protecting customer data. We created a governance framework showing exactly which data Copilot could access, who monitored it, and how they would respond to a data breach. They passed their audit without issues.

🧭 How We Work Together

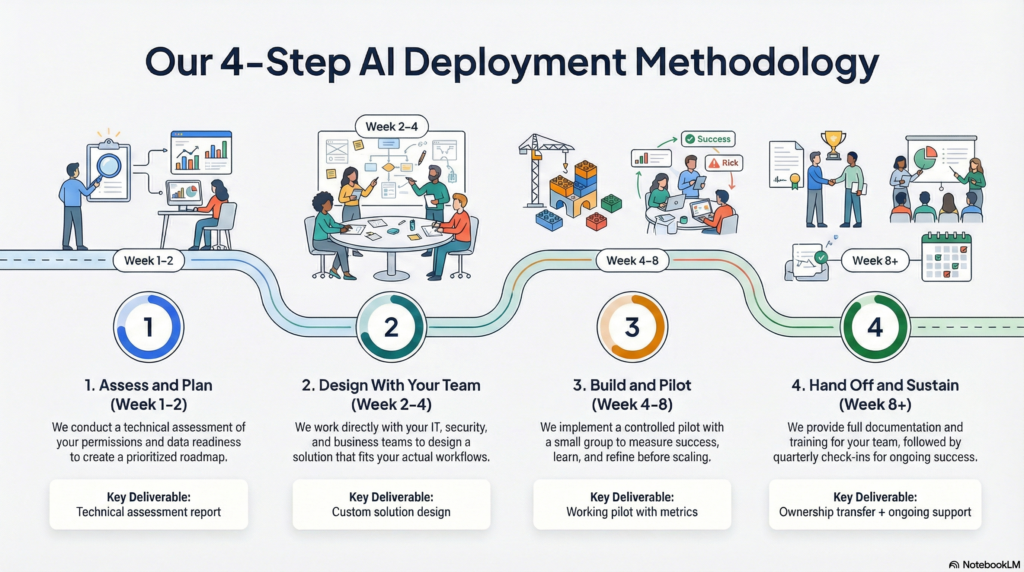

🌍 Step 1: Assess and Plan

We start with a conversation about your Microsoft 365 setup, your AI goals, and your constraints (timeline, budget, compliance requirements).

We run a technical assessment—checking permissions, data readiness, and governance maturity.

You get a report with findings and a prioritized roadmap with realistic timelines.

🛠️ Step 2: Design With Your Team

We don’t disappear to build in isolation. Your IT, security, and business owners work with us to design solutions that fit your actual workflows.

We show you trade-offs and options, not just “best practices” that don’t fit your situation.

🚀 Step 3: Build and Pilot

We implement in a controlled way—pilot first with a small group, learn what works, refine, then scale.

You control the pace. We provide clear success metrics so you know if it’s working.

📈 Step 4: Hand Off and Sustain

We document everything so your team owns it. We train the people who will maintain it.

Then we check in quarterly to catch problems, celebrate wins, and tackle the next priority.

🧩 What Success Looks Like

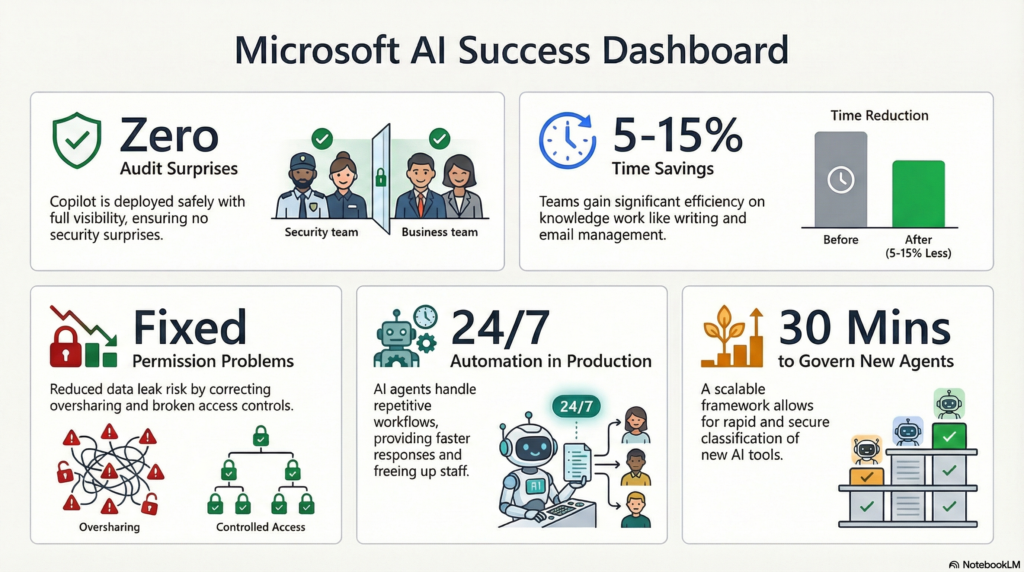

Copilot deployed safely: Your team gets a productive AI assistant. Your security team knows who sees what and why. No surprises in an audit.

Measurable time savings: Less time searching for files, writing routine emails, and formatting reports. Realistic expectation: 5–15% time savings on those specific tasks. Scaled across your team, that adds up.
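To see how 5–15% on specific tasks adds up, here is a back-of-the-envelope calculation. The inputs (100 people, 10 hours a week each on these tasks, 46 working weeks a year) are hypothetical placeholders; substitute your own numbers.

```python
def annual_hours_saved(employees: int, task_hours_per_week: float, savings_rate: float,
                       working_weeks: int = 46) -> float:
    """Hours saved per year when AI trims a fraction of the time spent on specific tasks."""
    return employees * task_hours_per_week * savings_rate * working_weeks


# Hypothetical team: 100 people, each spending 10 hours a week on email, file search, and report formatting.
for rate in (0.05, 0.15):
    hours = annual_hours_saved(100, 10, rate)
    print(f"{rate:.0%} savings: about {hours:,.0f} hours per year")
# 5% savings: about 2,300 hours per year
# 15% savings: about 6,900 hours per year
```

At roughly 1,840 working hours per person per year (40 hours × 46 weeks, also an assumption), that range works out to about 1.25 to 3.75 full-time equivalents.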

Reduced risk of data leaks: Permission inheritance fixed. Sensitivity labels applied. Data loss prevention policies enforced. Audit trails in place.

AI agents running in production: Handling customer support, internal requests, or document workflows. Customers or employees get faster responses. Your team focuses on higher-value work.

Governance that scales: New agents get reviewed and classified. Data access is controlled. You can explain your AI usage to auditors confidently.


🔥 Ready to Get Started?

Microsoft AI is powerful, but it’s not a switch you flip. The organizations that succeed treat it as a business transformation, not just a technology project.

They start small, measure everything, and build governance as they go.

If that approach makes sense to you, let’s talk.

Adaptsphere helps small and mid-sized businesses deploy Microsoft AI tools safely and effectively. We specialize in Microsoft 365 Copilot rollouts, Azure AI solutions, custom agent development, and AI governance frameworks.